The amount of data is continuously increasing, and many companies are already leveraging the high economic value of the information hidden within it. Edge devices in particular, such as mobile phones and vehicles, carry large numbers of sensors and produce more and more data, which offers great potential for innovation.

One hurdle is that this data often has to be evaluated in real time on the device itself, and the computing effort required can be enormous. Efficient solutions are therefore essential. Efficiency can be measured in terms of inference speed, power consumption, model size, or a combination of these and other criteria.

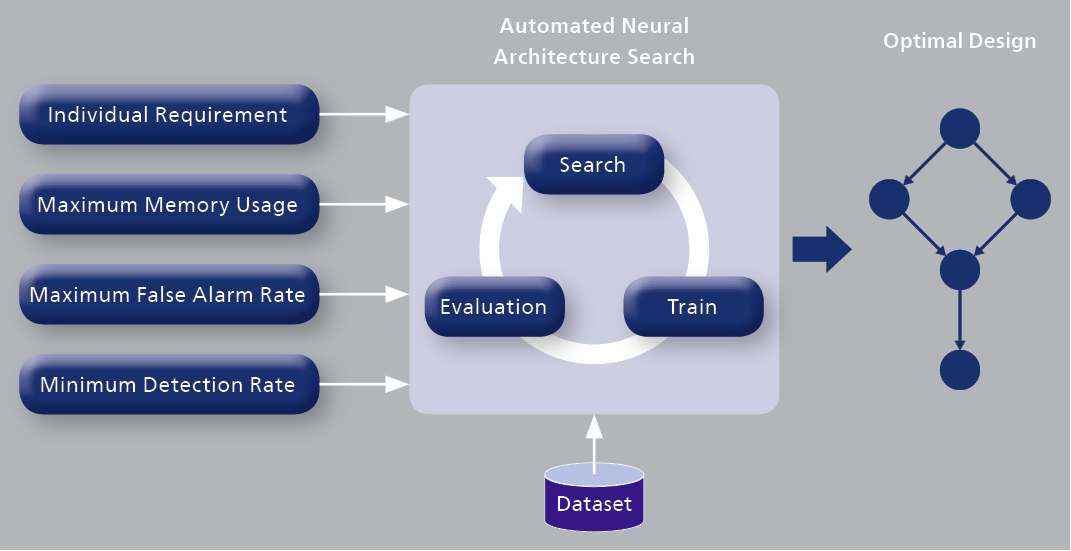

However, one of the most difficult and time-consuming tasks is designing an optimal neural network architecture that meets all of these criteria. Creating such architectures typically requires senior deep learning scientists with extensive experience.