The quality of machine learning models depends largely on the amount of data available for training. In recent years, the availability of data has increased rapidly. In particular, the Internet of Things (IoT) generates large amounts of data from millions of devices. This abundance of data makes it possible to train increasingly accurate machine learning models. In practice, however, making this data available to train a centralized model can be problematic because of regulatory restrictions or the technical difficulty of transmitting large volumes of data over low-bandwidth connections. One solution to these challenges is federated learning.

FACT – Federated Aggregation and Clustering Toolkit

Federated Learning Framework

Federated Learning

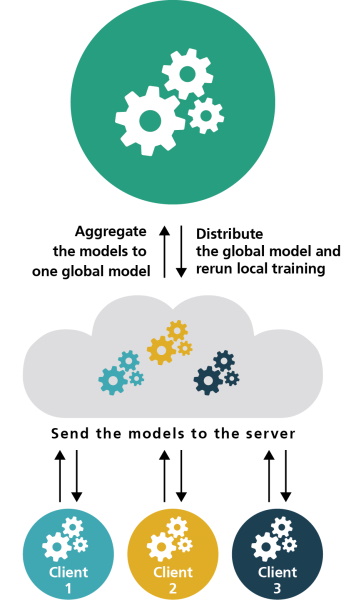

In federated learning, all training data remains exclusively on local devices, or clients, and model training is decentralized. Each client receives a model and improves it by learning from its local data. A summary of the client's updated model is then sent to a server over an encrypted connection. There, it is merged with the updates from other clients to form a shared global model.
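The round described above can be illustrated with a minimal sketch in plain Python. It is not FACT's API: the function names `local_update`, `federated_average` and `run_rounds`, the toy linear model and the sample-size weighting (the widely used federated averaging scheme) are all assumptions made for illustration, and the encrypted transport is left out.

```python
from typing import List, Sequence, Tuple

Weights = List[float]         # [w, b] of a toy model y ≈ w * x + b
Sample = Tuple[float, float]  # one private (x, y) observation


def local_update(weights: Weights, data: Sequence[Sample],
                 lr: float = 0.05, epochs: int = 20) -> Weights:
    """Improve the received model on one client's private data (gradient descent)."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(updates: Sequence[Weights], sizes: Sequence[int]) -> Weights:
    """Merge client models on the server, weighting each by its number of samples."""
    total = sum(sizes)
    return [sum(u[i] * s for u, s in zip(updates, sizes)) / total
            for i in range(len(updates[0]))]


def run_rounds(clients: Sequence[Sequence[Sample]], rounds: int = 50) -> Weights:
    """Run several communication rounds; only model weights leave the clients."""
    global_weights: Weights = [0.0, 0.0]
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        global_weights = federated_average(updates, [len(d) for d in clients])
    return global_weights


# Two hypothetical clients whose private data both follow y = 2x + 1.
clients = [
    [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)],
    [(-1.0, -1.0), (0.5, 2.0), (1.5, 4.0), (3.0, 7.0)],
]
print(run_rounds(clients))  # weights close to [2.0, 1.0]
```

The server only ever sees the weight vectors returned by `local_update`, never the `(x, y)` samples themselves.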

Federated Learning with FACT

The Federated Aggregation and Clustering Toolkit (FACT) framework provides basic federated learning functionality with the added flexibility of various aggregation and clustering algorithms. It thus enables rapid development of distributed learning systems. It is used to train machine learning models without the need to centralize or aggregate data.

FACT allows for easy implementation of federated learning and supports common machine learning frameworks such as scikit-learn or Keras. It is also modular and extensible, so it can be adapted to different use cases.

FACT uses Fed-DART, a distributed runtime environment we developed in »High Performance Computing« to allow easy switching between data science experiments or simulations and a scalable production environment.

FACT differs from existing frameworks in that it offers several options for aggregation and clustering. Both are important components of distributed learning algorithms and have a large impact on final model performance. Different aggregation methods can be used to control the extent to which local updates affect the global model. This particularly benefits scenarios where client data is highly heterogeneous. In addition, a flexible choice of clustering method allows local models to be combined more intelligently, potentially resulting in multiple global models that are each better suited to a particular group of similar clients.
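To make the idea of clustered aggregation concrete, here is a small sketch in plain Python. It does not reproduce any of FACT's clustering algorithms: the greedy distance-threshold grouping, the unweighted mean and the names `mean_weights`, `cluster_clients` and `aggregate_per_cluster` are hypothetical choices for illustration only.

```python
import math
from typing import Dict, List, Sequence, Tuple

Weights = List[float]


def mean_weights(models: Sequence[Weights]) -> Weights:
    """Unweighted coordinate-wise mean of several weight vectors."""
    return [sum(column) / len(models) for column in zip(*models)]


def cluster_clients(models: Sequence[Weights], threshold: float) -> List[List[int]]:
    """Greedily group client indices whose model lies within `threshold`
    (Euclidean distance) of an existing cluster's mean model."""
    clusters: List[List[int]] = []
    for idx, model in enumerate(models):
        for members in clusters:
            centroid = mean_weights([models[i] for i in members])
            if math.dist(model, centroid) <= threshold:
                members.append(idx)
                break
        else:
            # No existing cluster is close enough: start a new one.
            clusters.append([idx])
    return clusters


def aggregate_per_cluster(models: Sequence[Weights],
                          threshold: float) -> Dict[Tuple[int, ...], Weights]:
    """Return one aggregated model per cluster, keyed by its member indices."""
    return {
        tuple(members): mean_weights([models[i] for i in members])
        for members in cluster_clients(models, threshold)
    }


# Four hypothetical client models that fall into two clearly separated groups.
client_models = [[2.0, 1.0], [2.2, 0.8], [-1.0, 4.0], [-0.8, 4.2]]
for members, model in aggregate_per_cluster(client_models, threshold=1.0).items():
    print(members, model)
```

With a small threshold the two groups each receive their own global model, while a very large threshold collapses everything into a single model, which corresponds to ordinary aggregation over all clients.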

FACT in Practice

Our current applications focus on so-called »Silo Learning«. This means that, in contrast to other well-known application areas such as Google search autocompletion, we do not learn on a very large number of small devices. Instead, we have comparatively few data sources (clients), each of which holds a large amount of data. A concrete example is anomaly detection for invoice verification.

The starting point is several companies in the same industry that want to check invoices with the help of machine learning methods, as we frequently implement in our business area of Accounting Audit. Cooperation between the companies can benefit all of them, since some fraud patterns, for example, only become visible when the companies work together. However, each company also wants to retain sovereignty over its own data, and merging the invoice data into one data pool is out of the question.

Federated learning makes both possible. First, anomaly detection models are trained locally at each individual company. Then, the learned models are aggregated centrally. Finally, the knowledge gained from aggregating the individual models is fed back to each company's local model.

Example Project »Bauhaus Mobility Lab«

FACT is used in the Bauhaus Mobility Lab (BML). Here, the framework has already been integrated and applied in the project environment under realistic conditions.