Abstract

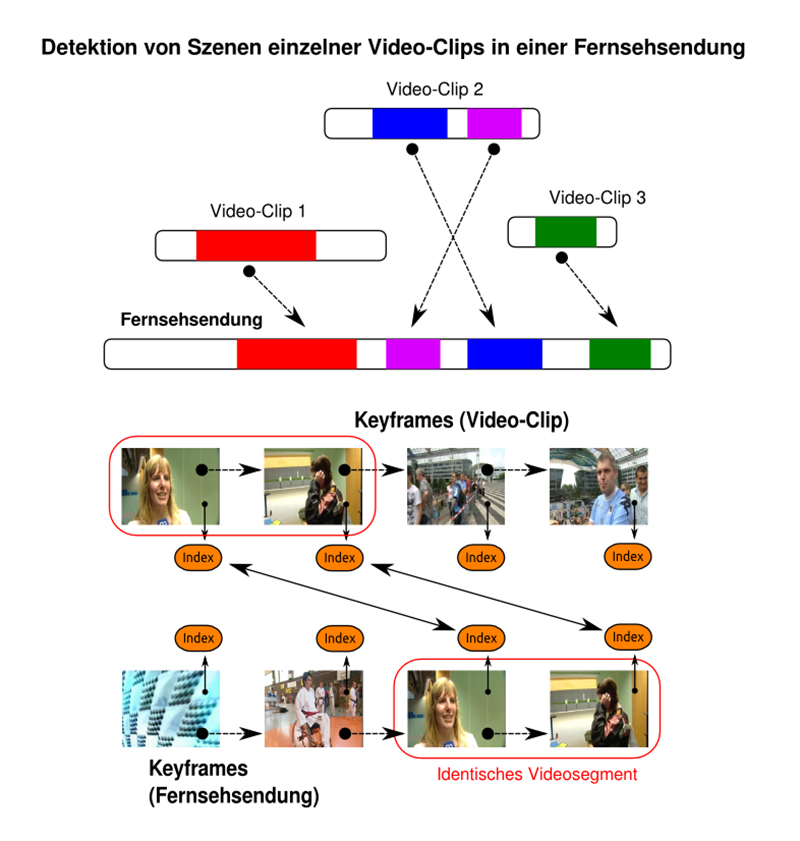

We have developed a solution for detecting scenes from source video clips that reappear in TV shows. Our project partners are Bavarian Broadcasting, a major German TV company, and AVID, a large audio and video production company.

Project Description

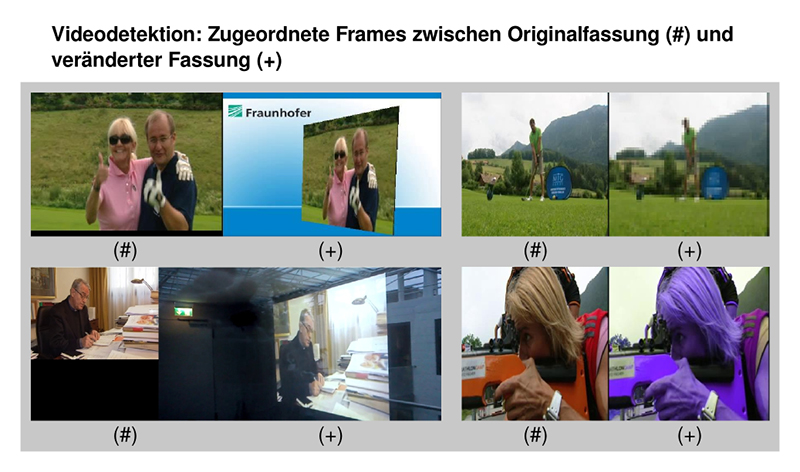

TV companies and broadcasters hold very large numbers of video clips, which are used to produce TV shows. During editing, the videos are often altered: many scenes are shortened, and in some cases the scene order is rearranged. The video frames themselves can also be modified in various ways.

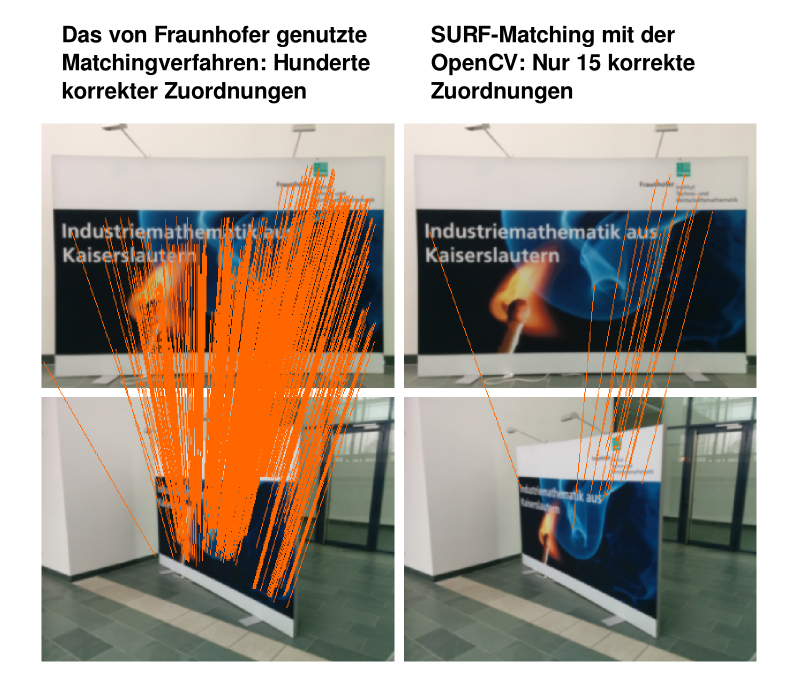

Typical examples are the insertion of TV logos, subtitles and patterns. Other modifications include changes of resolution, aspect ratio, sharpness, color and contrast. Sometimes a video is also processed by a series of complex transformations, for example when it is projected onto a screen in a virtual TV studio using chroma keying technology. Such effects are frequently used in the production of news shows. These modifications make it very difficult to automatically detect the scenes from the original video clips and match them to their modified versions in the TV shows.