GaspiLS is our scalable linear solver library, which has already proven itself in industry in the era of exascale computing. Many engineering simulations are based on Computational Fluid Dynamics (CFD) and the Finite Element Method (FEM), for example the determination of the aerodynamic properties of aircraft or the structural analysis of buildings. A large part of the computing time is spent solving the underlying systems of equations with iterative methods such as Krylov subspace methods. The performance of these iterative solvers therefore has a large impact on the overall runtime of such simulations. To obtain results from these simulations faster, we have developed the linear solver library GaspiLS.
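To illustrate what an iterative Krylov subspace solver does, here is a minimal sketch of the conjugate gradient (CG) method, the classic Krylov method for symmetric positive definite systems. It uses plain Python lists and dense matrices purely for readability; it is not GaspiLS code and does not reflect its API, and the function names `dot`, `matvec` and `conjugate_gradient` are hypothetical. Each iteration consists of one matrix-vector product and a few dot products. In a distributed-memory solver, the product requires exchanging boundary data between processes and each dot product requires a global reduction, which is why these operations largely determine how well a solver scales.

```python
def dot(x, y):
    """Dot product of two vectors given as lists."""
    return sum(a * b for a, b in zip(x, y))


def matvec(A, x):
    """Dense matrix-vector product A @ x (A is a list of rows)."""
    return [dot(row, x) for row in A]


def conjugate_gradient(A, b, tol=1e-10, max_iter=1000):
    """Solve A x = b for a symmetric positive definite A.

    Returns the approximate solution and the number of iterations used.
    """
    n = len(b)
    x = [0.0] * n          # initial guess x0 = 0
    r = list(b)            # residual r0 = b - A x0 = b
    p = list(r)            # first search direction
    rs_old = dot(r, r)
    for k in range(max_iter):
        if rs_old ** 0.5 < tol:  # stop once the residual norm is small enough
            return x, k
        Ap = matvec(A, p)
        alpha = rs_old / dot(p, Ap)                     # step length along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]   # update solution
        r = [ri - alpha * api for ri, api in zip(r, Ap)]  # update residual
        rs_new = dot(r, r)
        beta = rs_new / rs_old
        p = [ri + beta * pi for ri, pi in zip(r, p)]    # new A-conjugate direction
        rs_old = rs_new
    return x, max_iter
```

For example, `conjugate_gradient([[4.0, 1.0], [1.0, 3.0]], [1.0, 2.0])` approximates the exact solution x = (1/11, 7/11); in exact arithmetic, CG needs at most n iterations for an n×n system.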

Industry Relies on GaspiLS Due to Better Scalability

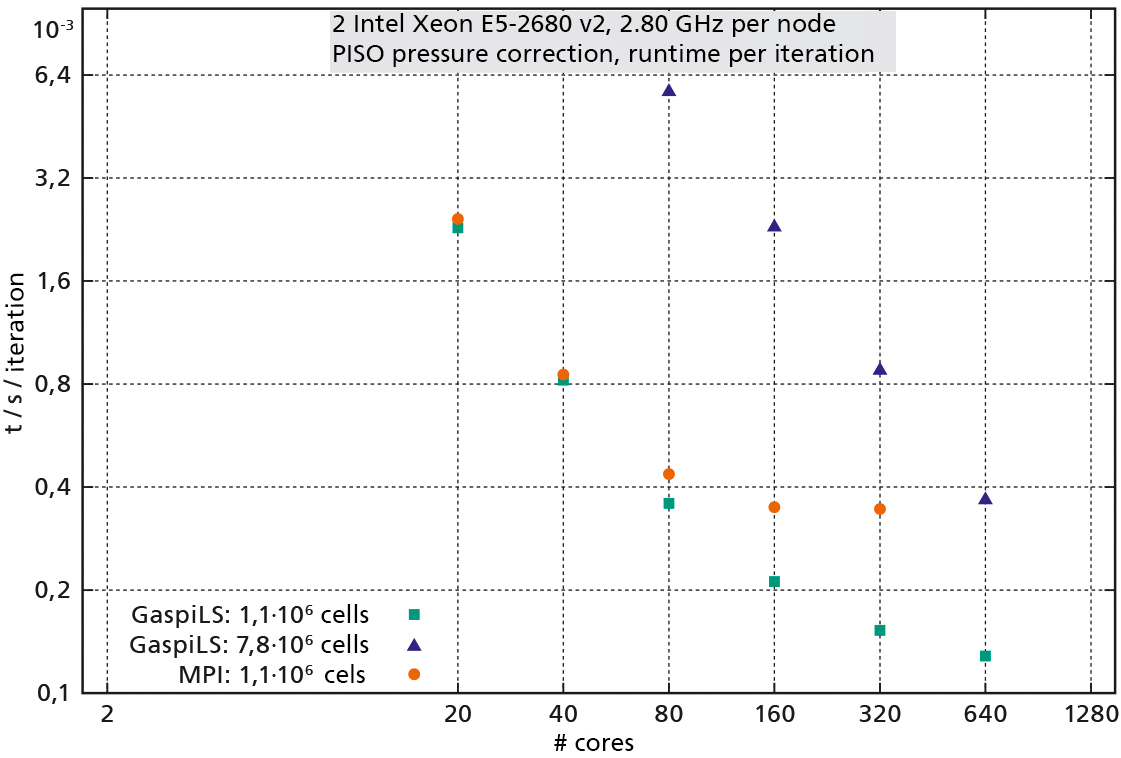

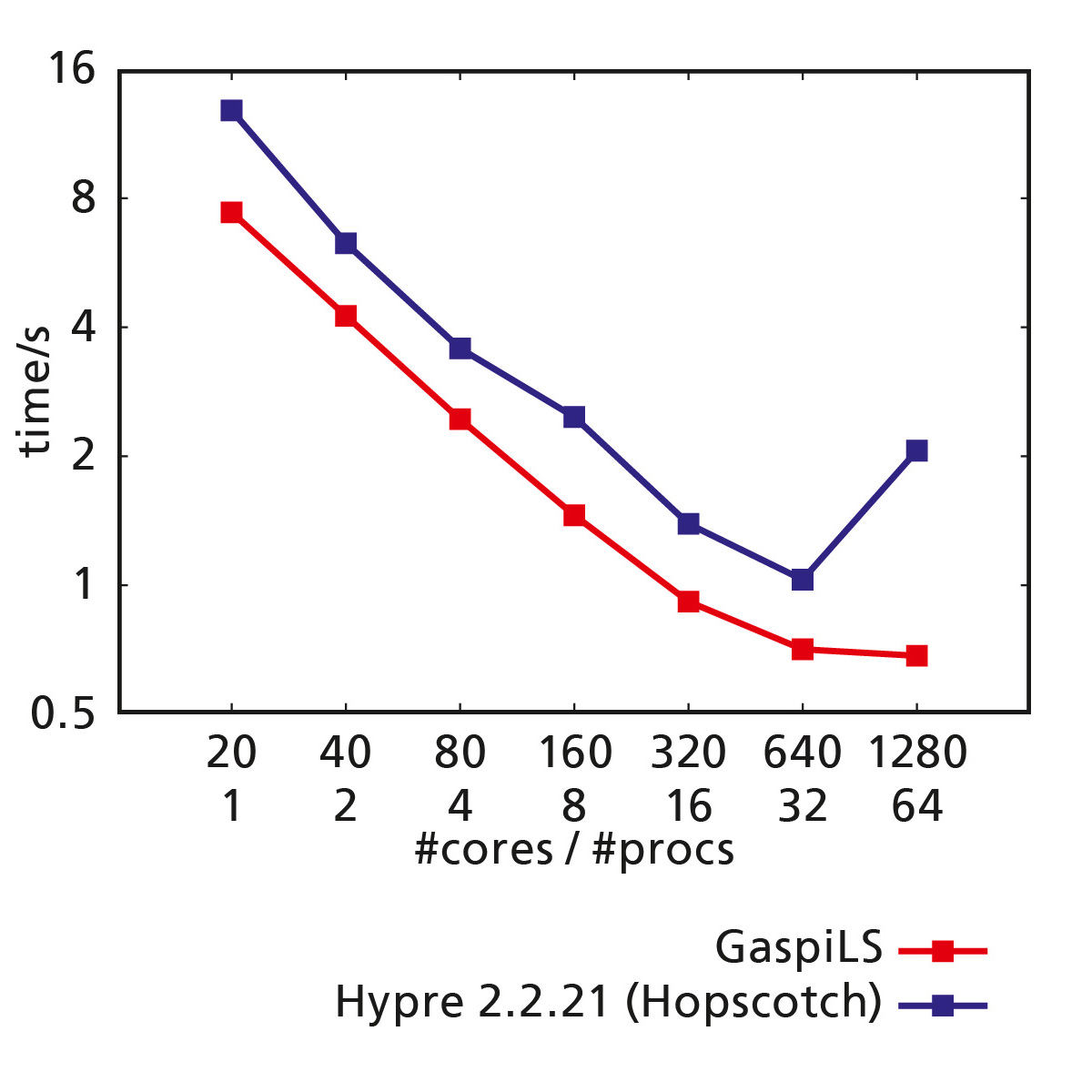

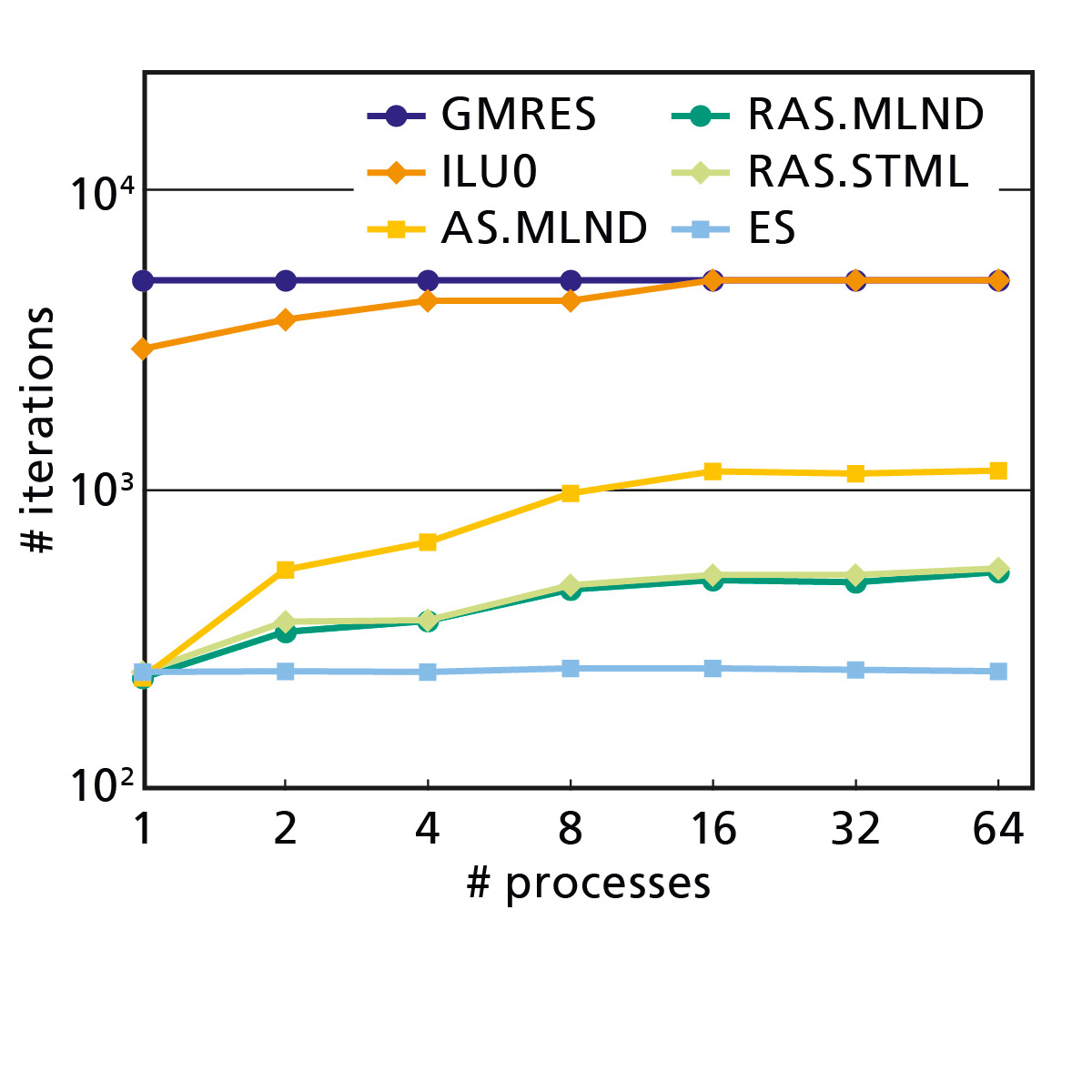

Scalability is a measure of how efficiently an implementation uses additional parallel resources. The ideal is so-called linear scalability: doubling the number of cores halves the runtime, which corresponds to full utilization of the computing resources, i.e. the cores within one or more CPUs connected via a network. The better an implementation scales, the more computing capacity can be put to productive use.
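For a fixed problem size (strong scaling), scalability is commonly quantified by the speedup S(p) = T(1)/T(p) and the parallel efficiency E(p) = S(p)/p, where T(p) is the runtime on p cores; linear scalability corresponds to E(p) = 1. The following minimal Python sketch computes both quantities. The runtimes in it are hypothetical numbers chosen for illustration, not GaspiLS measurements.

```python
def speedup(t_serial: float, t_parallel: float) -> float:
    """Speedup S(p) = T(1) / T(p) for a fixed problem size."""
    return t_serial / t_parallel


def parallel_efficiency(t_serial: float, t_parallel: float, cores: int) -> float:
    """Parallel efficiency E(p) = S(p) / p; 1.0 means linear scalability."""
    return speedup(t_serial, t_parallel) / cores


if __name__ == "__main__":
    # Hypothetical runtimes in seconds, keyed by number of cores (illustration only).
    runtimes = {1: 1000.0, 8: 125.0, 64: 20.0}
    t1 = runtimes[1]
    for p, tp in sorted(runtimes.items()):
        print(f"{p:3d} cores: speedup {speedup(t1, tp):5.1f}, "
              f"efficiency {parallel_efficiency(t1, tp, p):.2f}")
```

With these hypothetical numbers, 8 cores give a speedup of 8 (efficiency 1.0, i.e. linear scalability), while 64 cores give a speedup of 50 (efficiency of about 0.78), so part of the added hardware would be left unused.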

In practice, this results in the following advantages:

- more detailed models

- more accurate parameter studies

- cost-efficient utilization of hardware resources