When planning chemical plants, a large number of specifications, settings and objectives have to be taken into account. For example, operating and investment costs should be kept as low as possible, while the products should at the same time be of the highest possible quality. In addition, environmental and safety requirements must be met.

To meet these requirements, the process engineer must compare not only different settings of a single plant, but also alternative plants that produce the same end products from the same raw materials by different processes. This is a difficult task, especially for complex plants with a large number of apparatuses.

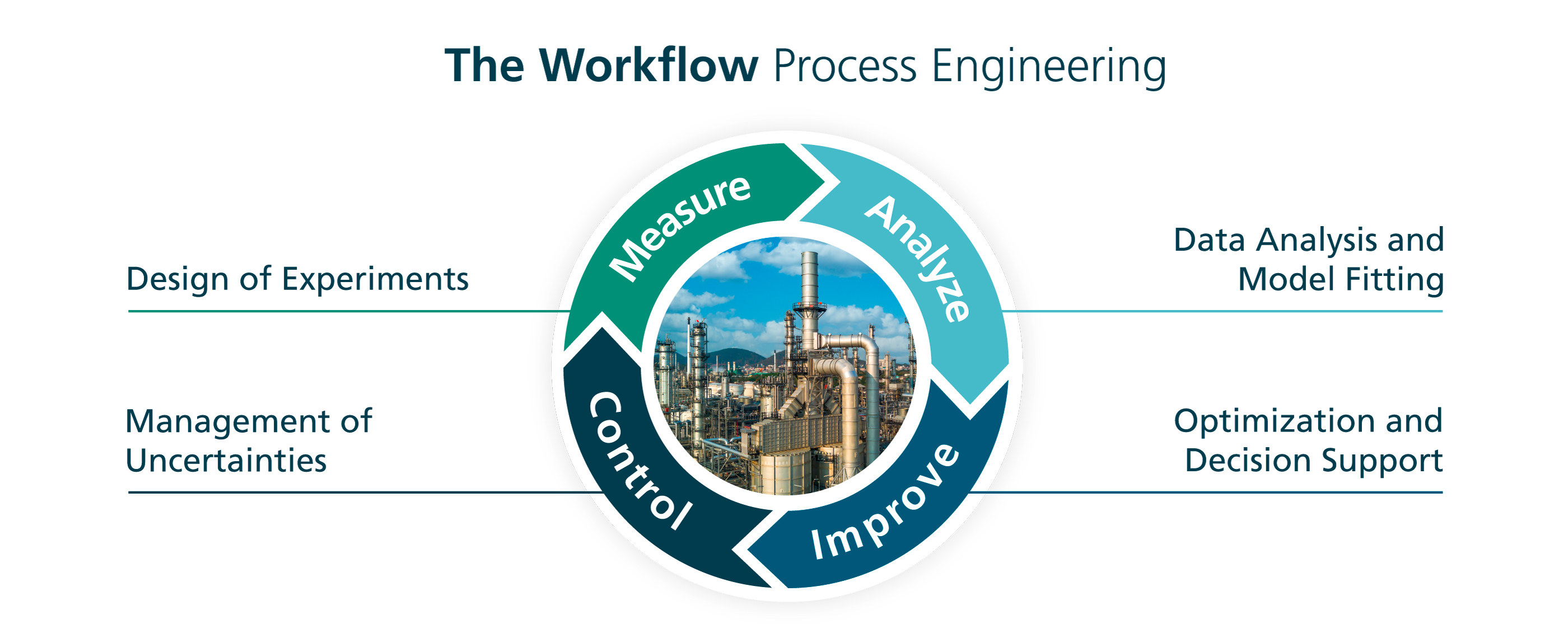

This planning is carried out with computer-aided simulations, in which the engineer searches for good settings and plant designs based on experience and empirical trial and error. Given the complexity of the problem, however, such a search will not find the best settings for the desired goals and objectives without a systematic and transparent optimization strategy.